UPDATE (August 28, 2023): I received correspondence from Bing Support confirming that we have been restored to Bing’s index. As I noted in the first update, I am leaving the body of this article as it was when I originally published it.

UPDATE (August 21, 2023): Bing began re-indexing our pages on July 26, 2023, and we began seeing signs of life in our referrals from Bing and DuckDuckGo on August 18, 2023. While I have not received formal confirmation that our mysterious, unexplained problems with Bing have been resolved, it appears that things are moving in the right direction. Please note that the article below is unchanged from its original publication and will not reflect the updated information.

Introduction

Our domain (thenewleafjournal.com) was blacklisted from appearing in Bing web search results on January 14, 2023. My attempts to resolve the issue have been unsuccessful – so our blacklisting persists through April 25, 2023. I long thought about writing an article about our experience to add to the growing list of small website Bing horror stories, but never quite got around to adding my two cents. Perhaps what I needed was for someone to do my work for me. I came across a post by Mr. Dariusz Więckiewicz titled Bing Jail, which he shared to Hacker News. Mr. Więckiewicz describes his experience with his website being blacklisted by Bing. I discovered from his informative post that his experience with the Bing blacklist is almost on all fours with what has happened to The New Leaf Journal.

I decided to use Mr. Więckiewicz’s story as a springboard to discuss our own Bing blacklist situation. I will work through his blog and highlight the points on which our experiences are the same (perhaps establishing a small trend) and some points on which our site’s situation differs from his.

Bing’s secret court issues sentences in January

Mr. Więckiewicz began his article with an introduction:

My personal site has been recently penalized by Bing, or if you prefer different naming for it – secretly blacklisted or shadowbanned. Don’t know exactly why but by the end of January 2023 I lost every indexed page that had been in Bing.

Dariusz Więckiewicz

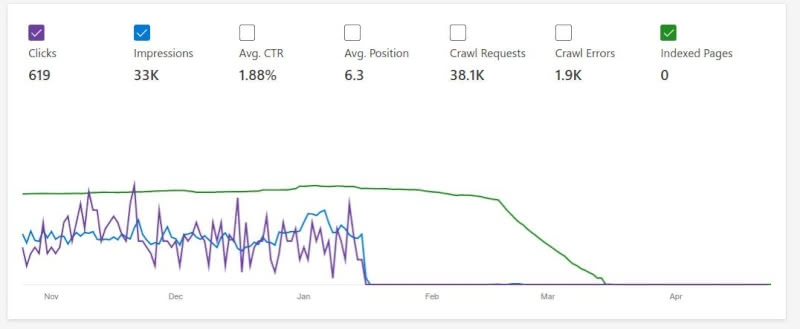

The introduction immediately caught my attention. The New Leaf Journal was added to Bing’s blacklist on January 14, 2023. You can see where the drop-off occurred.

While I cannot tell exactly when Mr. Więckiewicz’s site was unjustly added to Bing’s blacklist from the chart that he posted (see his article), it looks like it occurred in the first half of January, most likely within a few days of our blacklisting.

I discovered another very interesting tidbit after reviewing Mr. Więckiewicz’s blog. When we were first subjected to Bing’s blacklist, The New Leaf Journal’s web pages were still in Bing’s search index. However, I had not looked at our indexing status recently. Mr. Więckiewicz’s chart shows that his site’s web pages remained in Bing’s search index through mid-March, at which time every page was removed. I toggled on “Indexed Pages” in Bing’s Webmaster console and discovered that the exact same thing happened to The New Leaf Journal.

Curious.

The downstream effects of Bing blacklists

Mr. Więckiewicz initially was not concerned about Bing blacklisting his site. I will venture that his site, like ours, receives vastly more traffic from Google than it does from Bing. Thus, on the surface, one may join Mr. Więckiewicz in asking, “Why do I even bother?”

But New Leaf Journal readers will know that Bing bans affect more than Bing. Neither Mr. Więckiewicz’s website nor The New Leaf Journal was the first site to meet Bing’s blacklist. Back on August 7, 2022, six months before we were added to Bing’s blacklist, I published an article inspired by another small independent website’s Bing blacklist story. In that article, I noted something that I had discussed previously while reviewing alternative search tools and examining independent alternative search solutions such as Peekier: Many alternative search tools source their results from Bing’s search index. Chief among these is DuckDuckGo. Mr. Więckiewicz explained the effect that the Bing blacklisting had on his encouraging users to search his site through DuckDuckGo:

Because of that, when you use the search on my website, if the results were not as expected I suggested a link to search it through DuckDuckGo. Until the issue is sorted I have been forced to change it back to Google.

Dariusz Więckiewicz

While I have discussed using DuckDuckGo for domain-specific searches in other contexts, I have generally been content to encourage visitors to use our own serviceable in-built search functionality. However, Bing’s blacklisting has affected our status with DuckDuckGo in the same way it affected Mr. Więckiewicz’s site: The New Leaf Journal is DuckDuckGone. Mr. Więckiewicz stated that he is “angry because of my lack of presence on DuckDuckGo,” thus indicating that his site, like ours, had previously received (emphasis on the past) significant DuckDuckGo traffic.

The loss of DuckDuckGo traffic has been far more noticeable for The New Leaf Journal than the loss of Bing traffic. According to our privacy-friendly local analytics solution, Koko Analytics (see my review), from July 11, 2020, until the blacklisting, we received 3.2X more visitors from DuckDuckGo than we did from Bing. DuckDuckGo was our third most prolific referrer overall (Bing was fourth) behind Google and Hacker News. While losing DuckDuckGo has been the worst effect of the Bing blacklisting, Microsoft’s decision also erased us from Ecosia (15th ranked referrer), Yahoo (17th and 22nd for two different domains), and Qwant (26th).

I suspect that DuckDuckGo is a disproportionately significant issue for sites that publish content about tech and privacy. While our site is not as tech-focused as Mr. Więckiewicz’s, some of our best-performing articles are about free and open source software, online privacy, and content ownership – the sorts of issues that may be of interest to people who are most likely to opt for a privacy-focused search solution such as DuckDuckGo. While we received vastly more Google traffic than DuckDuckGo traffic, DuckDuckGo out-performed Google in New Leaf Journal referrals relative to its search engine market-share.

I maintain that there is an important place for alternative search tools such as DuckDuckGo and Peekier even though they are, to varying degrees, little more than front-ends for Bing’s search engine. They provide privacy benefits over big tech search engines such as Google and Bing (at least going by their privacy policies), and in some cases such as Peekier they may offer a unique experience with Bing’s foundation. Using Bing’s index (or in rarer cases Google’s, see Startpage as an example) makes it easier to design privacy-friendly search for general use-cases. However, the Bing dependency of so many alternative search tools comes with a cost. This cost does not only appear when sites like The New Leaf Journal or Mr. Więckiewicz’s blog are blacklisted, but also when Bing, which is operated by Microsoft, engages in questionable practices. For example, back in 2021, I discussed Microsoft’s decision to export the Chinese Communist Party-prompted censorship that Microsoft uses in China-based Bing abroad. I addressed how that decision affected Bing-based search tools such as DuckDuckGo, Qwant, and Swisscows. I expanded on this issue in January 2023 (after we were blacklisted) by highlighting other examples of Microsoft-CCP malfeasance regarding Bing. In doing so I criticized DuckDuckGo, which is affected by all upstream Bing censorship decisions, for misleading users by implying that it has more control over its search results than it actually does.

While alternative-search tools that use big tech proprietary search indexes have their uses, it is essential for them to be forthright about their reliance on upstream indexes.

Bing support is non-existent

Mr. Więckiewicz discovered that Bing Support “is simply non-existent.” I agree with him fully and will note that my experience with Bing Support is all square with his. Bing has a Support form and it allows Webmasters to raise issues regarding indexing. I have made several requests spaced at least two weeks apart. The best response I have been given is an automated acknowledgment that my request has been received along with a request code.

I will add one note to our experience with Bing Support. Following one of my multiple support requests, The New Leaf Journal immediately received a couple of visits from prod.uhrs.playmsn.com. I discovered an interesting comment on another Bing horror story by Mr. Relja Novović at Bike Gremlin. The commenter, who goes by Max, wrote:

Check if your website access logs contains prod.uhrs.playmsn.com in referrals, then your site has been manually banned, by some guy from india or south america, that system provides low-paid clickworker reviews metrics without feedbacks.

Commenter “Max”

Bing now looks like mafia.

Very interesting. UHRS stands for “Universal Human Relevance System,” and it is a Microsoft property. Microsoft touts it as “a crowdsourcing platform that supports data labeling for various AI application scenarios.” It also notes that it is used for “[s]earch relevance.” But Microsoft marketing aside, commenter Max’s description of it as a “system [which] provides low-paid clickworker review[] metrics without feedback[]” sounds about right. While I do not think that linking to clickworker work sites is the best idea for The New Leaf Journal, I will note that you can readily find information about UHRS from clickworker information sites and portals through basic search.

I will disagree with Max on one point, however. While UHRS clearly has some connection to Bing, I had seen it in my Koko Analytics referrers on occasion when I had no issues with Bing. However, I do not study my server logs in the sort of detail that Max is describing in the comment, so it is possible that I am missing some information about how UHRS clickworker metrics affect Bing’s indexing.
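For webmasters who do want to check their access logs in the way Max describes, the following is a minimal sketch in Python. It assumes the server writes the common "combined" log format used by Apache and nginx, where the referrer is the quoted field after the status code and byte count. The function name and the sample log lines (including their dates, IP addresses, and URL paths) are made up for illustration and are not taken from our logs.

```python
import re
from collections import Counter

# Assumption: "combined" log format, i.e.
# ip - - [date] "request" status bytes "referrer" "user-agent"
LOG_FIELDS = re.compile(r'"[^"]*" \d{3} \S+ "(?P<referrer>[^"]*)" "[^"]*"')
LOG_DAY = re.compile(r"\[([^:\]]+)")  # the day portion of [20/Feb/2023:10:01:02 +0000]


def count_referrer_hits(lines, needle="prod.uhrs.playmsn.com"):
    """Count, per day, the log lines whose referrer contains `needle`."""
    hits = Counter()
    for line in lines:
        fields = LOG_FIELDS.search(line)
        if fields is None or needle not in fields.group("referrer"):
            continue
        day = LOG_DAY.search(line)
        hits[day.group(1) if day else "unknown"] += 1
    return hits


# Hypothetical sample lines, invented for this example.
SAMPLE_LOG = [
    '203.0.113.5 - - [20/Feb/2023:10:01:02 +0000] "GET / HTTP/1.1" 200 5120 '
    '"https://prod.uhrs.playmsn.com/example" "Mozilla/5.0"',
    '203.0.113.6 - - [20/Feb/2023:10:05:00 +0000] "GET /about/ HTTP/1.1" 200 1000 '
    '"https://duckduckgo.com/" "Mozilla/5.0"',
    '203.0.113.7 - - [21/Feb/2023:09:00:00 +0000] "GET / HTTP/1.1" 200 5120 '
    '"https://prod.uhrs.playmsn.com/example" "Mozilla/5.0"',
]

if __name__ == "__main__":
    for day, count in count_referrer_hits(SAMPLE_LOG).items():
        print(day, count)
```

Grouping the hits by day makes it easier to compare UHRS visits against the dates of support requests or of the blacklisting itself. A hit only shows that someone followed a link from that host; it does not by itself say what, if anything, the visit meant for indexing.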

Diagnosing the problem

Mr. Więckiewicz describes his having made a good-faith effort to resolve the issue. I did the same. However, a good-faith effort only counts for something when both sides are acting in good faith. From Mr. Więckiewicz:

I started reading various things, and yes, I went through Bing Webmaster Guidelines. If you know my site a bit more you may see that is perfectly optimised and all requirements have been met so that wasn’t the issue.

Dariusz Więckiewicz

I too have reviewed the Bing Webmaster Guidelines and found nothing that would explain why The New Leaf Journal is being blacklisted. While I will not go so far as to say that my site is perfectly optimized (modesty prevents me from doing so), I note that The New Leaf Journal is performant (test using your tool of choice), lightweight, has no ads, and only hosts original writing content. The only SEO practices we engage in are trying to write interesting articles and giving them properly structured metadata.

Mr. Więckiewicz wondered whether “Microsoft disliked and decided to ban me behind the scene without acknowledging it.” He noted that he has written articles about Microsoft and Windows, “but all are legit and non of them relate[] to piracy.” While he could not find an obvious reason why Microsoft would target his site, he became suspicious because none of the other sites he works on aside from his personal blog/writing site were affected by Bing’s blacklisting. In his case, his site is a subdomain of wieckiewicz.org. His personal site, dariusz., was blacklisted, while his daughter’s site, anna., was not, leading to the conclusion that his specific site was singled out rather than the entire domain.

(This information from Mr. Więckiewicz is very useful. thenewleafjournal.com is obviously not a sub-domain. I assumed our domain as a whole was blacklisted, and that it would extend to subdomains. However, knowing that this may not be the case, I can apply subdomains of thenewleafjournal.com, not to mention newleafjournal.com, to side projects without those projects necessarily being blacklisted by Bing and all front-ends thereof.)

I have made little secret of the fact that I do believe that Microsoft and other big tech companies engage in inappropriate censorship, including on behalf of foreign authoritarian governments. Moreover, I think they make censorship decisions stemming from their uncomfortably close relationships with power players in our own government. While my censorship-related disagreements with the big tech companies have not been a common topic here at The New Leaf Journal, I did clearly criticize Microsoft for its pro-CCP censorship (while discrediting its excuses), and I have addressed issues involving the intersection of big tech and political censorship.

But with that being said, I doubt that The New Leaf Journal was specifically singled out on political grounds or for criticizing Microsoft. All of the articles I noted, including those criticizing Microsoft, were indexed by Bing for several years. In fact, the only case in which I suspected that an article I wrote was not being indexed for censorship-adjunct reasons was when Google inexplicably failed for more than six months to index my review of /e/ OS, a fork of a fork of Android, which has a mission statement explicitly criticizing Google. Google only indexed my /e/ OS review after it had made page one of Hacker News almost immediately after my separate post questioning Google.

However, I speak only for The New Leaf Journal in tentatively assuming that Microsoft did not single it out for anti-Microsoft opinions. It is entirely possible that Mr. Więckiewicz’s site, with which I am less familiar, was singled out by Microsoft on more nefarious grounds. In any event, I have published more explicitly anti-Microsoft content since we were blacklisted. (This is not because I was holding back before, but rather because Microsoft annoyed me.)

Mr. Więckiewicz decided to consult Bing’s so-called “AI” chatbot to see if it would tell him what the problem is. One of my post-blacklist articles criticizing Microsoft asked whether Bing’s AI chatbot would train on information from websites that Bing is preventing from appearing to searchers. I suspected that it would, and Mr. Więckiewicz’s predictably quixotic exchange with Bing’s chatbot does little to disabuse me of my hunch.

One exchange between Mr. Więckiewicz and the bot caught my attention. Bing’s chatbot incorrectly informed Mr. Więckiewicz that his site had no links pointing to it. Mr. Więckiewicz noted that Google Search Console shows many links. Here, I think Bing’s chatbot is inadvertently playing a word game. Assuming arguendo that Bing’s chatbot is consulting Bing’s index for information about Mr. Więckiewicz’s site, we saw that Bing removed all of Mr. Więckiewicz’s pages from its index in March. Thus, the chatbot can state that it found no links because Bing’s index contains no pages to link to.

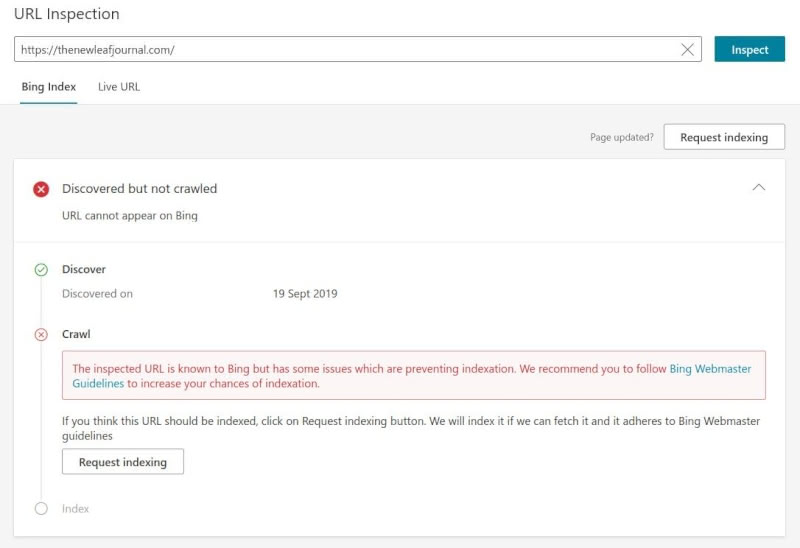

Next, Mr. Więckiewicz discusses an issue that I also have. Bing has a tool called “URL inspection.” This allows one to enter any URL and see how it appears in Bing. Mr. Więckiewicz showed the confusing URL inspection results he receives. I have the same issue. I will test my homepage just to ensure that we are using a New Leaf Journal page that was previously in Bing’s index.

Bing says our homepage is discovered but not crawled. Moreover, I am told that there are “issues which are preventing indexation” and am directed to the useless Bing Webmaster Guidelines (thanks!).

(Note I have no idea why Bing discovered our homepage on September 19, 2019, which was before The New Leaf Journal was live. While there was a previous thenewleafjournal.com, that site appears to have been last live in 2015 or 2016. We have owned the domain since 2018.)

Here is the confusing part, however. That first test was simply the current status of the page. I can also test the live URL – which sends Bing to the page to see if it can be indexed. Check out this test from 11:59 PM on April 24, 2023:

According to Bing, my homepage is not indexed because issues prevent it from being crawled. But a live test reveals that the URL can be indexed by Bing “given it passes quality checks.” What quality check is it “failing”? No one knows! Note that I confirmed this issue as early as February, but I am including the screenshots to show that my experience is the same as Mr. Więckiewicz’s. Like Mr. Więckiewicz, I have tried the “Request Indexing” button, but my multiple requests have gone unheeded.

One solution that Mr. Więckiewicz tried that I will not try was removing his site from his webmaster account and then re-adding it. I would have tried it if he discovered that it worked, but alas, the proverbial reset trick did not resolve his problem.

Finally, Mr. Więckiewicz wondered whether his site had been classified as a spam blog, or splog, by Bing. From the Hacker News discussion, I gathered that this refers to a tactic by nefarious sites to create spam links to legitimate sites, thereby, intentionally or indirectly, causing search engines to flag the legitimate sites. When we were first blacklisted by Bing, I considered this possibility and went through the backlinks that Bing had discovered pointing to The New Leaf Journal. However, we do not have a large number of spam backlinks (at least that is my impression). The number was manageable enough that I only took about 10-20 minutes to comb through our backlinks and disavow the obvious spam, most of which were from web pages that were no longer online. As you know, this did not fix any problems. At the moment, Bing shows that we have no backlinks because it removed all of our pages from its search index. For these reasons, I doubt that The New Leaf Journal was classified as a spam blog on account of shady backlinks.

(Note that we do not do any “link building” other than trying to publish interesting articles and my posting links to our articles on Mastodon, Pixelfed, Twitter, Minds, my BearBlog blog, and a couple of other spinoff sites that I am working on. See the collection.)

Something to January?

Mr. Więckiewicz discovered through his research that “something changed in Bing requirements around January.” It turns out that we are not the only sites having this issue. Mr. Więckiewicz links to a post by Mr. Dave Rupert, whose web developer site was also banned by Bing in January. Mr. Rupert, who has apparently seen some success in resolving the issue, notes that several other small writing sites were blacklisted in January, including the site of Mr. Kev Quirk, who previously published an article which inspired me to create a running list of all of our articles.

I had not previously seen Mr. Rupert’s article, but I was curious whether he had a solution for me since it appears that his site may be returning to Bing’s index. However, he generally took the same steps that I took. I will focus on one of Mr. Rupert’s recommendations in the next section though.

The genesis of my issue

One point on which I differ from the other Bing Webmaster accounts is that my blacklisting followed a specific action. I possess webmaster tools for Google, Bing, Yandex, and Seznam (I arguably have Naver Webmaster Tools but that is a long story). Like Mr. Więckiewicz, I used my Google Webmaster account to verify site ownership for Bing, albeit I did so in 2020. The only Webmaster account I check regularly is Google. This is because Google’s Webmaster Tools are somewhat useful (unlike Bing’s) and because I receive vastly more traffic from Google. I spent some time cleaning up our presence in Yandex after the Bing blacklisting, but Yandex is a very minor part of our web presence compared to Bing, much less Google.

I had been working on the site quite a bit in early January. One significant change is that I switched to using the child theme version of our WordPress theme. Since I was on a site-work kick, I decided to check in on Bing Webmaster for the first time in a while. There were no issues. All was well with Bing and we were doing fairly well (by our standards) with both Bing and DuckDuckGo. Early January saw some of our most consistent traffic to date.

I decided to trigger a Site Scan using Bing’s Webmaster Tools. I was generally content with monitoring Google for issues, but perhaps Bing would highlight some other points for improvement. I received my results the next morning – at which time I noticed that we were blacklisted by Bing (I was initially tipped off when I saw only one DuckDuckGo visitor for the day at about 11:00 AM).

Unfortunately, Bing did not keep my January 13 scan results (there may be a retention limit), but it flagged me for having a duplicate meta tag issue. Without going into too much detail, our site has hidden site-wide meta information (not hidden if you view page source) in HTML tags. You only want one set of meta tags. I use an SEO plugin for site meta tags. For that reason, I disabled the option to have our WordPress theme output the tags. I quickly determined that I had forgotten to turn off theme meta tags when I switched from our parent theme to child theme.
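For anyone who wants to check for this kind of duplication without waiting on a Bing Site Scan, here is a minimal sketch using only Python's standard library. It counts meta tags by their name (or property) attribute and reports any that appear more than once. The class name, function name, and sample page are hypothetical; the sample merely imitates a theme and an SEO plugin both emitting a description, and it is not our actual page source.

```python
from collections import Counter
from html.parser import HTMLParser


class MetaTagCounter(HTMLParser):
    """Counts <meta> tags keyed by their name or property attribute."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        key = attributes.get("name") or attributes.get("property")
        if key:  # tags such as <meta charset="utf-8"> have neither and are skipped
            self.counts[key.lower()] += 1


def duplicate_meta_tags(html):
    """Return {meta key: count} for every key that appears more than once."""
    parser = MetaTagCounter()
    parser.feed(html)
    return {key: count for key, count in parser.counts.items() if count > 1}


# Hypothetical page source, invented for this example.
SAMPLE_PAGE = """
<html><head>
<meta charset="utf-8">
<meta name="description" content="Output by the SEO plugin">
<meta property="og:title" content="Example article">
<meta name="description" content="Output by the theme">
</head><body></body></html>
"""

if __name__ == "__main__":
    print(duplicate_meta_tags(SAMPLE_PAGE))
```

This only looks at a single page's HTML as a string. Whether Bing's scanner applies the same definition of "duplicate" is an assumption on my part, so treat the output as a hint rather than a reproduction of Bing's check.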

When we were first blacklisted, I suspected that the meta tag issue could be the culprit – notwithstanding the fact that it is a relatively minor flaw and that we were not dinged for it by Google or Yandex. I quickly resolved the issue, flushed our cache, and confirmed on Bing that it was resolved. While I was in the area, I worked on resolving minor SEO issues that Bing flagged, including missing meta descriptions and alt attributes for images. As you know, none of these fixes resolved my indexing problem.

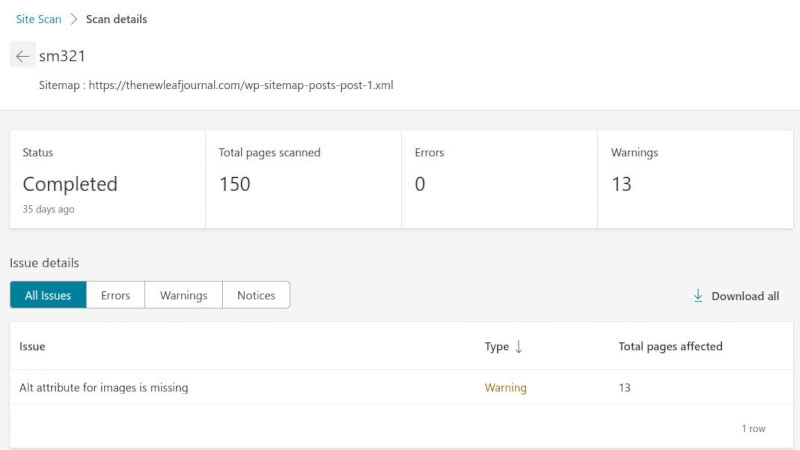

I subsequently ran a few more site scans, resolving minor issues as Bing identified them. See the results from scanning 150 pages from our post sitemap on March 21:

My most recent scan one month ago revealed 13 pages out of 150 with at least one image missing alt attributes. That is all. I added those alt attributes.
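A missing alt attribute is easy to find locally. The sketch below, again using only Python's standard library, lists the src of every image that has no alt attribute at all. The names and the sample markup are hypothetical. Note one assumption: an empty alt="" is treated as present, since that is the conventional way to mark a decorative image, and I do not know whether Bing's scan treats it the same way.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of each <img> that lacks an alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if "alt" not in attributes:  # alt="" counts as present (assumption)
            self.missing.append(attributes.get("src") or "")


def find_images_missing_alt(html):
    """Return the src values of images in `html` that have no alt attribute."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


# Hypothetical markup, invented for this example.
SAMPLE_HTML = (
    '<p><img src="a.png">'
    '<img src="b.png" alt="">'
    '<img src="c.png" alt="A new leaf"></p>'
)

if __name__ == "__main__":
    print(find_images_missing_alt(SAMPLE_HTML))
```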

I have come to suspect that meta tags were not the reason for the blacklisting.

Mr. Rupert described his own meta tag fix:

I fixed my description crawl errors by adding a bunch of redundant descriptions. I don’t think this is good SEO, but at a minimum I’ve cleared all “Errors” from the Bing Webmaster Tools Site Scan. I’ll go back through and make this better but I’m trying to see if I can trigger a successful indexing.

Dave Rupert

I did not have the same meta tag issue, and I would not have undertaken this solution. But it seems to be working for his site, so I will include it here as a point of interest.

Returning to Mr. Więckiewicz, he ran a full site scan and was confronted with various minor SEO issues – not the sort of issues that should result in a quality original writing blog like his being blacklisted by Bing, which indexes many low quality click farm sites. But despite the absurdity of the situation, Mr. Więckiewicz did what I did – he made a good faith effort to resolve the issues.

This really is Bing being Bing

Having worked through Mr. Więckiewicz’s excellent first-hand account of Bing being Bing, I will add an additional note.

The Bing blacklisting has eliminated The New Leaf Journal from Bing and all search tools that use Bing’s index. But how are we doing elsewhere? One would think if Bing had discovered a serious problem with The New Leaf Journal, other search engines would have flagged us too.

This is not the case.

Google is the most-used search engine in the world. It has its own search index. The New Leaf Journal has no indexing issues with Google whatsoever. Moreover, we are receiving more traffic from Google in early 2023 than we had in previous months and years. We are not only indexed by Google, we are doing well in Google against our own benchmarks.

I have webmaster tools for Yandex. Yandex is a Russian-first search engine with a full English version. Yandex is often more finicky about indexing than Google and Bing, as I discovered in 2020. However, we have no indexing issues whatsoever with Yandex. We are also receiving more visitors from Yandex than we had in previous months (however, Yandex referrals are modest even when compared to Bing and usually behind Brave, discussed below). I will add, as I explained in a post, that Yandex Webmaster Tools distinguish themselves from Bing’s by actually being useful. If Yandex has an issue with the site, it tells you. It also provides clear explanations of the issues – so clear that I was able to fix a strange, very particular-to-Yandex crawling issue by adding a line to our robots.txt file based solely on its Webmaster guidance. On one occasion when I needed to fix something, I did not need to send a support request into the void; Yandex affirmatively offered support to my webmaster email account and then answered my question clearly and expeditiously. Novel!

We have seen occasional referrals from Baidu, the largest search engine in China, which has its own index. I genuinely have no idea what our Baidu indexing status is since the entire UI is in Mandarin and while it does technically offer webmaster tools, they are not accessible without an invitation (I think I have published a few things that would make such an invitation unlikely, notwithstanding the fact I only speak English). However, the fact we have more Baidu referrals since January 14, 2023, than Bing and DuckDuckGo referrals combined suggests that Baidu also has not blacklisted us.

Brave Search says that it uses its own search index. While there are some questions about Brave’s actual independence from Google and Bing, including one that I raised here, its results are distinguishable from both. Brave does not have webmaster tools, so I am flying a bit blind in the analysis. However, we are not only indexed by Brave, but performing as well as or better than we were in the months preceding the Bing ban. At least we know it is not Bing.

Mojeek is an independent search engine with its own crawler and index. It is run by a great team. We have been in Mojeek’s index since spring 2021. We remain in Mojeek’s index.

Seznam is a Czech-first search engine with its own index and crawler. I have webmaster tools for it, but the UI is in Czech and the information, at last check-in, is lacking, so I never consult them. However, The New Leaf Journal was, and remains, in its index.

Had we begun to have indexing issues with other search engines when Bing blacklisted us, I would have been forced to conclude that the problem was The New Leaf Journal. However, when every other independent search index that had us before continues to have us, I am forced to conclude that this is a Microsoft Bing problem instead of a me problem.

Conclusion: The path forward

I have largely given up on fixing the Bing issue because it appears to be out of my hands. My position going in was that notwithstanding Bing being Bing, the onus was on me to try to improve The New Leaf Journal and resolve issues. While our site is not absolutely perfect (missing meta descriptions on categories, uncertainty about whether JSON schema is showing up for all crawlers on articles, etc), I think our site is built on a good foundation. Our pages load quickly without relying on CDNs, they work entirely without JavaScript, we do not use ads or third party scripts or trackers, and we produce original writing content on many subjects. I leave judgment of our site’s quality to the readers, but I can confidently say that our fundamentals in terms of performance, privacy, and search engine friendliness are solid.

This is all to say that I have done my part to resolve Bing’s problems. I made sure to resolve the initial issues before even trying to contact Bing. Moreover, in my support requests, I identified what I had done to try to resolve the initial Site Scan issues. Google has no issue with us. Nor do Yandex, Brave, Mojeek, Seznam, or various other small search engine projects. Having tried to guess what Bing’s problem could be, I see little reason to upset the apple cart with everyone else for uncertain prospects with Bing.

With that being said, I will continue to keep an eye out for more Bing information, and may see if mass submitting our newest URLs does anything. If the issue is not resolved by June, I may move to blacklist Microsoft’s clickworker farm – why should I allow their farm if they will not index my site?

The loss of Bing is unfortunate for several reasons. While I prefer some ways of searching to others, people have their own search tools. Many people (with poor taste) use Bing directly. Some do so because they want to try its (objectively dumb so-called) AI functionality. Others, like me for years, use DuckDuckGo as a privacy-focused general-use search alternative. Some prefer other privacy Bing front-ends to DuckDuckGo. But no matter what people use to search, I would like them to be able to find The New Leaf Journal. It is for this reason that I not only targeted Google back in 2020 when I first started, but also Bing. I originally sought Yandex webmaster tools because I saw that they were easily available and in English and because, at that time, DuckDuckGo had a small (very specific) Yandex reliance (we have ended up receiving more Yandex visitors than I would have expected). It is for this reason that despite all of my critiques of Chinese Communist Party censorship, I do not block Baidu’s search crawlers – why would I want to make it less likely that ordinary internet users and readers in China, or Russia in the case of Yandex, can enjoy (I hope, at least) our perennially virid writing content? It is why I make sure that we are allowing important independent search engines to crawl our site.
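One way to confirm that a robots.txt file is not accidentally shutting out a crawler is Python's standard urllib.robotparser module. The sketch below parses robots.txt rules from a string and reports whether each listed user agent may fetch a given URL. The sample rules, the example.com URL, and "ExampleBlockedBot" are hypothetical and are not our actual robots.txt. The crawler tokens are the ones these search engines publish as far as I am aware, so verify them against each engine's documentation before relying on them.

```python
from urllib.robotparser import RobotFileParser


def crawl_permissions(robots_txt, user_agents, url):
    """Return {user agent: True/False} for whether each agent may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in user_agents}


# Hypothetical rules, invented for this example.
SAMPLE_ROBOTS = """\
User-agent: ExampleBlockedBot
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""

# Assumed crawler tokens; check each search engine's own documentation.
CRAWLERS = ["Googlebot", "bingbot", "YandexBot", "Baiduspider", "MojeekBot", "SeznamBot"]

if __name__ == "__main__":
    results = crawl_permissions(SAMPLE_ROBOTS, CRAWLERS, "https://example.com/some-article/")
    for agent, allowed in results.items():
        print(agent, "allowed" if allowed else "blocked")
```

A check like this only shows what the robots.txt file permits. It says nothing about whether a search engine actually chooses to crawl or index the site, which is precisely the Bing problem.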

But alas, what Microsoft Bing chooses to do, and why, is out of my hands. I assume we will be released from the Bing blacklist eventually, but at this stage I doubt it will be on account of anything I do. If anything, the situation, which I estimate has cost us somewhere in the neighborhood of 30% of our page views (I have come to suspect through inductive reasoning that DuckDuckGo visitors have lower bounce rates than Google visitors), highlights that reliance on search engines for visitors comes with some of the same issues – albeit less severe – that reliance on social media comes with (we rely very little on social media). For this reason, I will simply work harder and do better – both in terms of producing more interesting articles and also in creating new ways for visitors to discover and follow all of the writing at The New Leaf Journal and its sister projects.

I conclude by expressing my gratitude to Mr. Więckiewicz for taking the time to share his Bing Jail story on his blog and thus inspiring me to stop being lazy and share my own (with an assist from his post). I am glad to see that people who are having the same issue are sharing their experience, and I hope that my throwing The New Leaf Journal’s hat into the ring may help other webmasters. With my story now shared, I look forward to returning to our normal Microsoft-bashing and other interesting articles tomorrow.